Thursday, December 9, 2021

Squeezing data center AI into a tiny microcontroller

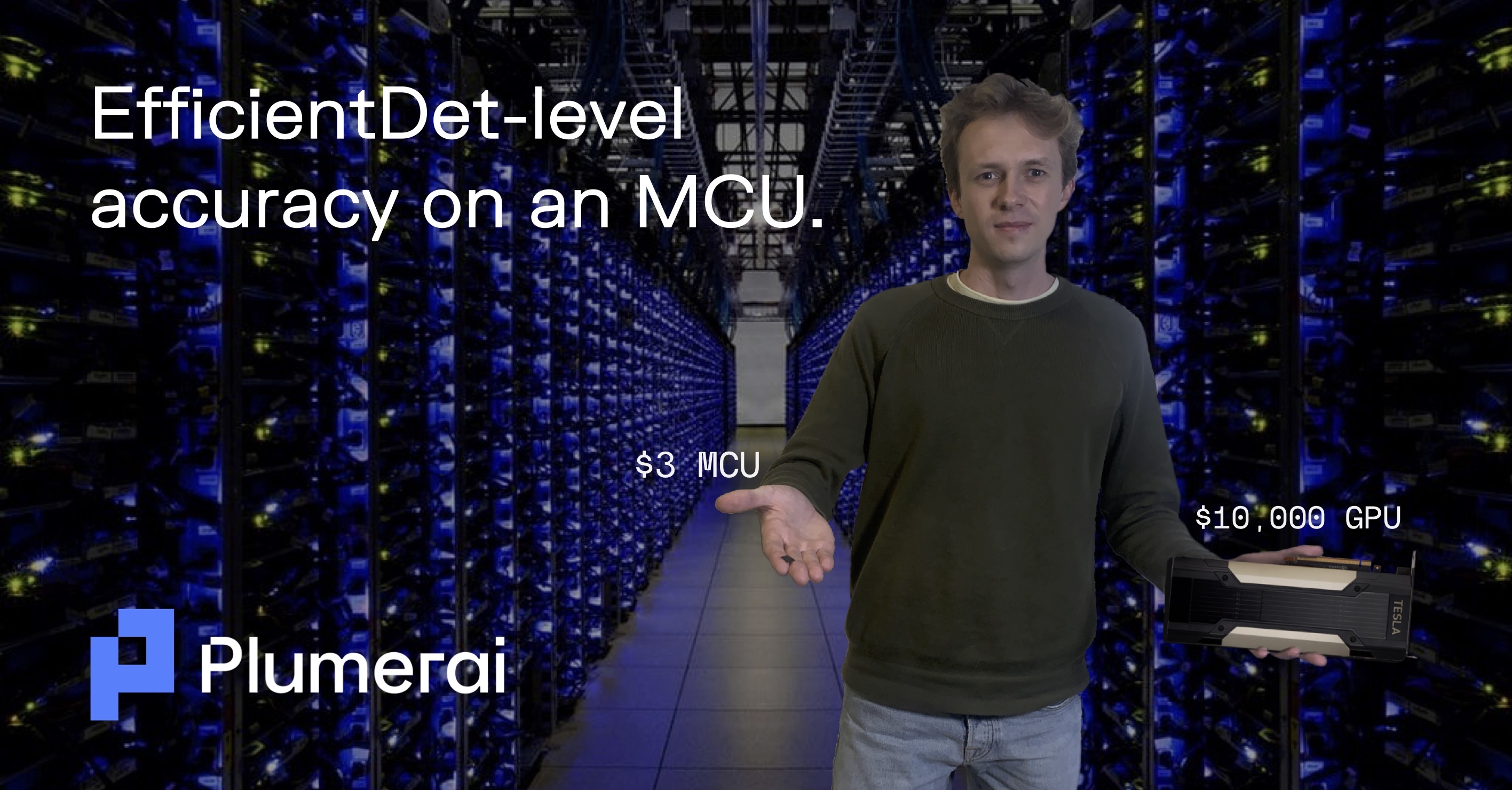

How we replaced a $10,000 GPU with a $3 MCU, while reaching EfficientDet-level accuracy.

What’s the point of making deep learning tiny if the resulting system becomes unreliable and misses key detections or generates false positives? We like our systems to be tiny, but don’t want to compromise on detection accuracy. Something’s got to give, right? Not necessarily. Our person detection model fits on a tiny Arm Cortex-M7 microcontroller and is as accurate as Google’s much larger EfficientDet-D4 model running on a 250 Watt NVIDIA GPU.

Person detection

Whether it’s a doorbell that detects someone walking up to your door, a thermostat that stops heating when you go to bed, or a home audio system where music follows you from room to room, there are many applications where person detection greatly enhances the user experience. When expanded beyond the home, this technology can be applied in smart cities, stores, offices, and industry. To bring such people-aware applications to market, we need systems that are small, inexpensive, battery-powered, and keep the images at the edge for better privacy and security. Microcontrollers are an ideal platform, but they don’t have powerful compute capabilities, nor much memory. Plumerai takes a full system-level approach to bring AI to such a constrained platform: we collect our own data, use our own data pipeline, and develop our own models and inference engine. The end result is real-time person detection on a tiny Arm Cortex-M7 microcontroller. Quite an achievement to get deep learning running on such a small device.

But what about accuracy?

Our customers keep asking this question too. Unfortunately, measuring accuracy is not that simple. A common approach is to measure accuracy on a public dataset, such as COCO. We have found this to be flawed, as many datasets are not accurate representations of real-world applications. For example, a model that performs better on COCO does not necessarily perform better in the field, because the dataset includes many images that are not relevant for a real-world use case. In addition, there’s bias in COCO, as it was crowd-sourced from the internet. These images are mostly of situations that people found interesting to capture, whereas always-on intelligent cameras mostly take pictures of uninteresting office hallways or living rooms. For example, the dark images in COCO include many from concerts with many people in view, whereas a dark office or home is usually empty.

The only right dataset to measure accuracy on is a relevant and clean dataset that is specifically collected for the target application. We do extensive, proprietary data collection and curation, and we use our own test dataset to measure accuracy. It goes without saying that this test dataset is completely independent and separate from the training dataset: it was collected in different locations with different backgrounds, situations, and subjects.

After selecting the dataset to measure accuracy on, we need to choose a model to compare to. We found Google’s state-of-the-art EfficientDet the most appropriate model for evaluation. EfficientDet is a large and highly accurate model that is scalable, enabling a range of performance points. EfficientDet starts with D0, which has a model size of 15.6 MB, and goes up to D7x, which requires 308 MB.

The surprising result? Our model is just as accurate as the pre-trained EfficientDet-D4. Both achieve an mAP of 88.2 on our test dataset. D4 has a model size of 79.7 MB and typically runs on a $10,000 NVIDIA Tesla V100 GPU in a data center. Our model size is just 737 KB, making it 112 times smaller. Using our optimized inference engine, our model runs in real time on a tiny MCU with less than 256 KB of on-chip RAM.

| Model | Plumerai Person Detection | Google EfficientDet‑D4 |
|---|---|---|
| Model size | 737 KB | 79.7 MB |
| Hardware | Arm Cortex-M7 MCU | NVIDIA Tesla V100 GPU |
| Price | <$3 | $10,000 |
| Real-world person detection mAP | 88.2 | 88.2 |
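
For readers unfamiliar with the metric behind the mAP figures above, the sketch below shows one common way to compute average precision (AP) for a single class such as "person". With only one class, mAP reduces to that class's AP. The post does not state which evaluation protocol or IoU threshold was used, so this is a generic illustration using all-point interpolation, not the exact procedure behind the reported numbers. The function name and the toy inputs are hypothetical, and each detection is assumed to have already been matched against the ground truth.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Single-class AP with all-point interpolation.

    scores: confidence of each detection.
    is_true_positive: whether each detection matched a ground-truth person
        (the matching itself, e.g. by IoU threshold, is assumed done already).
    num_ground_truth: total number of labeled persons in the test set.
    """
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    precisions, recalls = [], []
    tp = fp = 0
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)

    # Interpolate: precision at each point becomes the best precision
    # achievable at that recall or any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Area under the interpolated precision-recall curve.
    ap = 0.0
    previous_recall = 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


# Toy example (made-up numbers): four detections, four labeled persons.
toy_ap = average_precision(
    scores=[0.9, 0.8, 0.7, 0.6],
    is_true_positive=[True, False, True, True],
    num_ground_truth=4,
)
print(f"AP = {toy_ap:.3f}")
```

Missed detections lower recall and false positives lower precision, so a single AP value penalizes both failure modes mentioned at the start of this post.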

Full stack optimization

Such a result can only be achieved with a relentless focus on the full AI stack.

First, choose your data wisely. A model is only as good as the data you train it with. We do extensive data collection and use our intelligent data pipeline for curation, augmentation, oversampling strategies, model debugging, data unit tests, and more.

Second, design and train the right model. Our model supports a single class, whereas EfficientDet can detect many. Such a multi-class model is not needed for our target application. In addition, we include our Binarized Neural Networks technology, which significantly shrinks the network while also speeding it up.
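
To give a feel for why binarization can shrink and speed up a network: when weights and activations are restricted to +1 and -1, each value fits in a single bit, and a dot product reduces to an XOR followed by a bit count. The sketch below illustrates this well-known trick in plain Python. It is not Plumerai's implementation, and the helper names `pack_signs` and `binary_dot` are made up for illustration.

```python
def pack_signs(values):
    """Pack a sequence of +1/-1 values into one integer, one bit per value.

    A set bit stands for +1, a cleared bit for -1.
    """
    bits = 0
    for position, value in enumerate(values):
        if value > 0:
            bits |= 1 << position
    return bits


def binary_dot(a_bits, b_bits, length):
    """Dot product of two packed +1/-1 vectors of the given length.

    Positions where the bits differ contribute -1, matching positions
    contribute +1, so the result is length - 2 * (number of mismatches).
    """
    mismatches = bin(a_bits ^ b_bits).count("1")
    return length - 2 * mismatches


# Toy vectors (made up) to compare against the ordinary dot product.
a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]

reference = sum(x * y for x, y in zip(a, b))
fast = binary_dot(pack_signs(a), pack_signs(b), len(a))
print(reference, fast)
```

Compared with 8-bit values, one bit per value takes an eighth of the storage, and on real hardware the XOR and bit count process many values per instruction.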

Third, use a great inference engine. We developed a new inference engine from scratch, which greatly outperforms TensorFlow Lite for Microcontrollers. This inference engine supports both standard 8-bit networks and Binarized Neural Networks, and it does not affect accuracy.

Famous mathematician Blaise Pascal once said, “If I had more time, I would have written a shorter letter.” In deep learning, this translates to “If I had more time, I would have made a smaller model.” We did take that time, and it wasn’t easy, but we did build that smaller model. We’re excited to bring powerful deep learning to tiny devices that lack the storage capacity and the computational power for long letters.

Let us know if you’d like to continue the conversation. High accuracy numbers are nice, but nothing beats running the model in the field. We’re always happy to jump on a call to show you our live demonstrations and discuss how we can bring AI to your products.